ICT4E Assessments Help Avoid Wasteful Tragedy

ICTs can be powerful, essential tools for learning: understanding, interpreting and communicating about the real world OR they can be black holes into which we pour our money, intelligence and time, getting very little in return.

Dr. Patti Swarts, Education Specialist (GeSCI)

1. Do we really need to assess ICT4E initiatives?

In a word: yes. We do need to assess ICT4E initiatives, particularly when we are working in resource-scarce environments such as the developing world, where investment in ICT can constitute what Unwin (2004) describes as a 'wasteful tragedy' if it is not managed and used properly.

There is no doubt that ICT in education presents countries with great opportunities. I suppose this supports the notion, raised in this forum discussion, that the benefits of technology are obvious, on a par with the obvious benefits of electricity. However, the use of any technology, ICT or otherwise, also brings challenges, and these must be addressed if the benefits are to be realized.

Some of the ICT challenges in Education relate to cost, sustainability, optimizing usage, and making teaching and learning meaningful for students and relevant to the development of the country. Many policy makers, planners, managers and practitioners still lack experience, knowledge and judgment in the systems, methods and media involved in what is still the emerging field of ICT in Education.

This lack of experience is particularly evident in the integration of newer technologies such as 1:1 saturation models. In my organization, the Global e-Schools and Community Initiative (GeSCI), we believe that assessment is essential for addressing such challenges.

2. Are ICT4E assessments effective in measuring outcomes?

I find that the view expressed in this discussion has some resonance in the literature. That view holds that ICT evaluations are necessarily flawed because we lack the right tools to assess ICT impact in conventional systems. In a GeSCI-commissioned meta-review of research on ICT in Education, Le Baron and Mc Donough (2009) discuss the "grammar of schooling" (Arbelaiz & Gorospe 2009, cited in ibid.). This term refers to the entrenched practices of conventional schooling, whose 'rules constrain transformational curricular innovation especially ICT integration'.

In this context, Angeli and Valanides (2009, cited in ibid.) argue that realizing the transformational impact of ICT would require 'a clear, commonly understood epistemological framework' in order for 'teachers to understand ICT's transformational potential or for educational decision makers to assess whether or not high standards are being met'.

Despite the lack of such a common framework for understanding and assessment, I agree with Clayton's comment in this forum. Even if we do not yet have a perfect tool for evaluating ICT impact, we should use what we have and refine our tools in the process.

3. Do we even have the tools to tell if they are effective? What tools are those?

There are several methods for monitoring and evaluating ICT in Education projects. Two of the most common approaches cited by Wagner et al. (2005) are Outcome Mapping (OM) and Logical Framework Analysis (LFA). The former assesses the changes that programmes bring about in the behaviour and actions of stakeholders (e.g. officials, teachers, parents). The latter assesses a programme's results in terms of products and services (e.g. curricula, teacher training, educational software).

4. Are we really using these assessment tools correctly?

There is an emerging view that traditional M&E frameworks are not enough. They need to include components of applied research for 'proof of concept'. This research should provide more rigorous, field-tested knowledge about the following: a) what works and why, b) how the initiative could contribute to educational development priorities (access, quality, capacity and relevance), c) the enabling conditions and barriers for taking the initiative to scale, and d) what the results of large-scale application might be (Batchelor & Norrish n.d.).

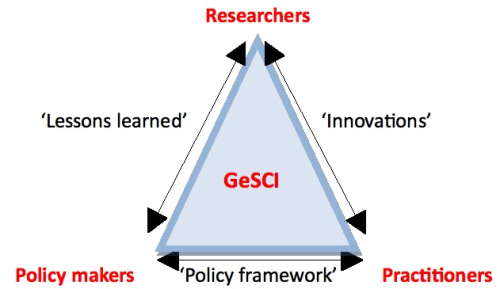

At GeSCI we take this further. We believe that for M&E and research on ICT in Education to have any impact, the results must inform and shape policies and programmes and be adopted in practice. In our view, the interaction between these three dimensions is what maximizes the potential of ICT use for transformational impact in education systems.

Figure 1. Stakeholder facilitation in GeSCI research framework


5. And regardless of the outcomes, should we really wait for long-term results, or should we implement ICT4E deployments now, as the case is compelling enough already?

In a word: maybe. The case is not compelling. However, governments made nervous by the digital and knowledge divide between the developed and developing worlds are implementing ICT4E deployments anyway. What we should do is focus our efforts on pursuing a deeper and broader research and evaluation agenda.

In my view, such an agenda should shift away from the current focus of much ICT-in-Education evaluation and research. That focus is on quantitatively measured learning outcomes, which are long-term results and potentially problematic in terms of cause-and-effect attribution. The agenda should instead examine the qualitative, potentially 'disruptive' force of technologies such as saturation models to shake the "grammar of schooling" in transformative ways.

What do others think? Is the case compelling? Should we assess? What should be the focus of our assessment?

References

- Batchelor, S. and Norrish, P. n.d. Framework for the Assessment of ICT Pilot Projects. Available from infoDev & accessed 10 November 2009

- Le Baron, J. and Mc Donough, E. 2009. Research Report for GeSCI Meta-Review of ICT in Education – Phases One and Two. Available from GeSCI & accessed 10 November 2009

- Unwin, T. 2004. Towards a framework for the use of ICT in Teacher Training in Africa. Available from infoDev & accessed 11 November 2009

- Wagner, D.A., Day, B., James, T., Kozma, R.B., Miller, J., and Unwin, T. 2005. Monitoring and Evaluation of ICT in Education Projects: A Handbook for Developing Countries. Available from infoDev & accessed 10 November 2009


From a Western perspective, the benefits of technology are obvious. In the developing world, however, perhaps only the benefits of mobile phones, ATMs and radio seem obvious. Given a choice, local officials would probably spend funds on clean drinking water, toilet facilities, medicine and seeds for crops rather than on ICTs. Local educators would want more teachers, not computers. I therefore believe that evaluations are necessary to show local officials and national policy makers that ICTs are worth the investment. They need to know which local problems ICTs can address and what opportunities they make possible.

The arrival of a new technology or method does not necessarily mean that we need a brand-new tool to measure change. We can still use existing evaluation models, provided we know their limitations. Examples include Tyler's Model (measuring gains within a treatment group), Stufflebeam's Context-Input-Process-Product (CIPP) Model and Stake's Responsive Model. Or we can simply ask: did the introduction of "x" achieve the project goals? If not, why not? In an ideal world, assessments would be more rigorous. In the developing world this can be a challenge. It is especially hard to achieve large-scale applications that are unaffected by intervening variables such as culture. Qualitative studies add depth, but not necessarily breadth.

I agree with you that the results of any assessment and research "must inform and shape policies and programmes". Having conducted a number of evaluations myself, I find that the real challenge is not conducting the assessment. It is getting decision-makers to act, and to act in a way that benefits learners and their community in the long run. You can conduct a variety of assessments and make sound recommendations, but they have limited impact unless decision-makers take action. (Often, once the splashy news and media coverage disappears, decision-makers stop monitoring the project, identifying and addressing challenges, and providing the necessary support.)

Mary et al.: Drawing on the Phase Two report commissioned by GeSCI, we have informally discussed how little attention the broad educational literature devotes to ICTs. ICT specialists seem to be chattering robustly among themselves.

This concern is reinforced by a recent meta-analysis commissioned by the US Department of Education. It examined empirical evidence on online learning outcomes compared with face-to-face (F2F) or blended settings. The study included only findings from scientific, quantitative research comparing outcomes in one environment with those in another. Interestingly, virtually none of this research targeted the K-12 demographic. Indeed, until 2006 there were no qualifying K-12 studies at all. This was despite the billions of dollars that had already been invested in virtual schooling by that time. There is a lot more to assessment than empirical, outcome-comparing research (apples to oranges?). Still, learning that K-12 research was all but absent from the studies the USDoE found valid is a trifle unsettling.

The USDoE report is available as a PDF at:

http://www.ed.gov/rschstat/eval/tech/evidence-bas…

I think there is another aspect to consider regarding the introduction of ICTs in education, especially in developing countries.

Education in such countries is typically very rigid and bureaucratic. Teaching generally consists of transferring the teacher's information to the students in a top-down approach.

I see ICTs as an "agent of change" in such situations, for both teachers and students.

So perhaps we should evaluate what changes have occurred in both teaching and learning, rather than students' test scores. With such feedback, we can build a feedback loop into a continuous change model to improve education.

I should add that I am an engineer by training, but I have some experience in introducing OLPC into the Pacific region.