Improving ICT Assessment in Education

In this debate there appears to be a lot of consensus on both sides of the motion, and even a spill-over in commentary from one side to the other. Perhaps this is because the motion was more of a question than a statement. We have ended up not quite arguing for and against, but rather questioning the status of assessment in education in general, and its impact (or lack thereof) on ICT policy and practice in particular. Therein lies most of our consensus.

We recognize the inadequacies of evaluating the use of a tool, and its potential for transformational innovation, in education systems that are intent on simply harnessing it to maintain the status quo. As Rob (on the other side) observed, ‘any real assessment of educational reform requires a new reflection on what skills and knowledge the children are supposed to acquire at school’.

And so in my response I would like to revisit the question presented by Wayan and reflect a little more on its parameters. I would also like to draw on commentary from both sides of the discussion (quite a lot of comments on your side Rob) to tease out some of the issues.

Do we need to assess ICT4E initiatives?

Rob notes that most reforms have historically been imposed on the basis of political prejudice rather than scientific support. However, the fatigue with the syndrome of failed education reform is perhaps changing as we move into the 21st-century information age. As we do so, we are witnessing a growing discrepancy between school and the ‘outside world’, where information, knowledge, innovation and creativity are replacing the traditional sectors of commerce and industry, and where new technologies are changing the way we interact, communicate, socialize and network.

It is a world where mobile connectivity is becoming commonplace and where digital literacy is a critical tool for social interaction, knowledge exchange and knowledge construction. If schooling fails to transform itself, it may be transformed, albeit haphazardly, by the technological change outside its gates, and perhaps in a way that may be detrimental to learning.

There is also the challenge of digital divides, both between and within societies, with access denied to the poorest and most marginalized. ICT is seen as a means of bridging such major divides. There is thus a renewed sense of urgency, despite the fatigue, for systemic ICT investment and reform to provide all learners with the skills they will need for meaningful participation in the economic, social and cultural life of the new knowledge-based economies and societies.

In such scenarios of massive, large-scale investment and reform, assessments are needed to hold systems accountable. Assessments can also provide policymakers with the gateway they need to direct systemic change. As Clayton observes in his comments, ‘evaluations are necessary to demonstrate to the local officials and national policy makers that ICTs are worth the investment’. Assessments can help policymakers identify the factors that best influence ICT impact (changes in curriculum, pedagogy, assessment, teacher training) as well as the barriers to ICT use, such as a lack of skilled support and adequate infrastructure.

If we assess, how do we do it?

In his commentary, Juan describes his skepticism about the relevance of some of the ICT evaluations he has come across over the years, in particular studies of proprietary software where the emphasis is more on evaluating the technology than the learning. He notes the lack of comparison with alternative activities and the lack of cost-effectiveness analysis. John also comments that effective assessments have not been designed. On cost-effectiveness, John is shocked by a US study illustrating a lack of empirical research in an area where billions of dollars have been invested. What is particularly ‘unsettling’ is the notion that politicians don’t seem to care.

Yet I wonder, John, whether it is that politicians don’t care, or that there is a sense of exasperation with the lack of defined mechanisms for informing decision-making on such a massive scale of investment and change. Scheuermann, Kikis and Villalba (2009) discuss the lack of clear information in most studies about the multifaceted effects and impact of ICT on the learner and learning. It is a situation that is ‘especially unsatisfying for policy-making stakeholders that aim at defining evidence-based strategies and regulatory measures for effective ICT implementation and efficient use of resources’ (ibid. p. 1).

There are calls for more widely accepted indicators and methodological approaches to assess the inputs, utilization and outcomes/impact of ICT integration initiatives in order to address this gap (Trucano, 2003; Balanskat et al. 2006; cited in ibid.). Yet these approaches still have limitations in measuring the impact of ICT use, as they often represent a snapshot: a one-time, one-level approach. Ian comments on the ‘imperfection’ of the data collection in such evaluations, which are more often conducted to satisfy funders’ insistence on seeing ‘educational’ results. He also draws attention to the difficulty of attributing those results to the ICT intervention.

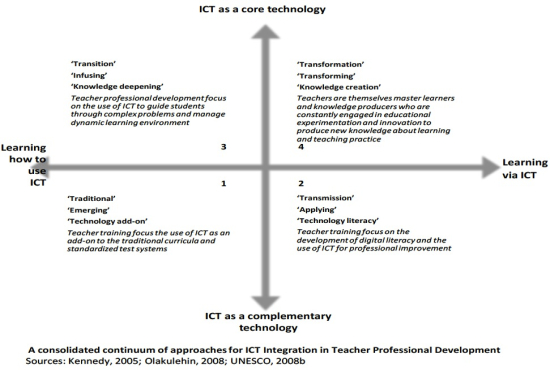

A more powerful approach is the use of indicators within development models of ICT integration in education – to study the progressive phases through which teachers and students adopt and use ICT. Morel’s Matrix is an instrument that can be used for evaluating the degree to which ICTs have been integrated in an educational system through four distinct successive phases: a) emerging, b) applying, c) integrating, and d) transforming.

At GeSCI we have developed an ICT-Education matrix to help our partners focus on what teachers and learners actually do when they use ICTs in schools and institutions through each of the four successive phases (Figure 1). Such models, when used to guide Planning, Monitoring & Evaluation (PME) in combination with the indicators approach, can give a clearer picture of what the integration of ICTs in education should look like at each development stage.

Figure 1: GeSCI ICT-Education Matrix

I like Mark Beckford’s observation that the key to assessment is to keep it ‘simple but useful’. In developing PME tools such as the ICT-Education matrix for our partners, we hope we can do just that.

Related Link

Scheuermann, F., Kikis, K. & Villalba, E. 2009. A Framework for Understanding and Evaluating the Impact of Information and Communication Technology in Education. Available online & accessed 23 November 2009

Mary, I really like the model you introduce in Figure 1. I've always been fond of "magic quadrants." How do you measure where a particular school is in your model?

Mary,

I will kick off the cross commenting with a problem I see in the ICT4E evaluations.

Any halfway thorough evaluation of a new technology in education will take at least three years. In education, the time horizon is in decades. So in general, this time is not a problem.

However, if that technology is a specific ICT device, none of the devices tested will still be available at the end of the study. An example would be a 1:1 laptop initiative, e.g., the OLPC. By the end of the test period, new devices with new capabilities and lower prices will be available, making the old evaluation worthless for policy decisions.

Unless, of course, the evaluation is much more general, with results that can be transferred to other, newer devices and technologies.

Your matrix promotes such a general view.

But can you explain more about how a specific program can be rationally and empirically evaluated for policy purposes without becoming obsolete by the time of publication?

For illustrative purposes, we could take as an example an evaluation of deploying OLPC laptops in a third world country like Nepal. But any other example would be fine, e.g., the hole in the wall initiative in, say, India.

What studies could be done to decide whether this deployment would be good for education, with results that remain valid after the study is over, when the devices will differ in capabilities and price?

Rob van Son

Hi Rob,

You raise a pertinent question regarding the usefulness of evaluations if the devices are already different in capabilities and price by the time results are disseminated etc.

Perhaps, then, the focus should not be on the devices. ICT does not exist in isolation but within the larger socio-cultural context of the school and the education system. In relation to Mark’s question above on school assessment for e-readiness, we see that the use of ICT devices is interconnected with many other elements of schooling systems: policy, curriculum & assessment, infrastructure, pedagogy, organization & management, and professional learning.

Papert (1993) observed that as ICT enters the socio-cultural setting of the school it ‘can weave itself into learning in many more ways than the original promoters could possibly have anticipated’ (p. 53).

So to provide policy makers with useful information we essentially require a more holistic model for studying and understanding how ICT can weave its way into the learning process.

In April of this year, GeSCI held a North South Partnership Research workshop in Dublin, gathering more than 50 participants from different education and research networks to brainstorm exactly the question you raise, Rob: what kinds of models and experiences in ICT in Education research and evaluation can provide our partners with useful information for policy and practice? (see workshop details at: http://www.gesci.org/partnerships.html )

One approach presented at the workshop was an Activity System model derived from Activity Theory (Engestrom 2001), currently being piloted in an Expansive School Programme in the Southern African Development Community (SADC) Region, a joint collaboration between the Universities of Botswana and Helsinki (Engstrom 2009).

It is a model that focuses evaluation and research on the activity system in which the technology is located (tools, subject, rules, community, division of labour, object, outcome). The approach seeks to understand the tensions and contradictions that emerge as technology is introduced into school activities. For example, as teachers experiment with technology (tools) in their practice to integrate ICT across the curriculum (object): How are classroom activities supported by the broader socio-cultural context of the education system, such as the curriculum, teaching loads and timetables (rules)? What needs to be negotiated or renegotiated in the roles of teacher and learner (division of labour)? What problems arise, as you noted in your first posting, Rob, from the different understandings of technology use held by teachers, learners, managers and parents (community)?

This model has been used successfully to assess ICT integration in Singaporean schools through successive Masterplans for ICT in Education, to analyse ‘successes’, ‘failures’ and ‘contradictions’ in their totality at different system levels, and to develop pedagogical models and approaches to ICT integration in schools based on the emerging understanding (Lim and Hang 2003).

So whether it is an OLPC or a hole-in-the-wall deployment, if studies focus on the totality of the ‘successful’ and ‘unsuccessful’ elements of integrating the device, then when the study is over and the device is obsolete, policy makers, administrators and teachers are still left with usable knowledge about the opportunities and limitations of technology take-up, and about how technology (whatever the device) can best be integrated within the broader socio-cultural context of their systems.

Best wishes,

Mary

Engestrom, Y. 2001. Expansive Learning at Work: toward an activity theoretical reconceptualization. Journal of Education and Work [Online]. 14 (1), pp. 133-156. Available from: Academic Search Premier http://www.library.dcu.ie/Eresources/databases-az… [Accessed 01 April 2008]

Engstrom, R. 2009. Expansive School Transformation in the SADC Region. In: Workshop on North/South Research Partnerships for ICT – Education. 21 April 2009, Dublin, Ireland [Online]. Available from: http://www.gesci.org/old/files/docman/Presentatio… [Accessed 24 November 2009]

Lim, C.P. and Hang, D. 2003. An activity theory approach to research of ICT integration in Singapore schools. Computers and Education [Online]. 41, pp. 49-63. Available from: Academic Search Premier http://www.library.dcu.ie/Eresources/databases-az… [Accessed 15 December 2008]

Papert, S. 1993. The Children’s Machine: Rethinking School in the Age of the Computer. New York: Basic Books.

We are faced with the dilemma of trying to assess using a syntax that policy-makers, funders and MoE types are willing to accept, while also wanting to produce meaningful information on what's really happening in schools and for learners. Rob, you raise the interesting question of timeliness. If we tolerate the publication cycle of traditional peer-reviewed scholarship, then we condemn ourselves perpetually to be "fighting the last war" (sorry about the bellicose analogy), and we will always lose it. Moreover, if we rely solely on the narrow focus of much empirical research, we risk discarding the important mysteries for the satisfaction of tracking down trivial detail. (I overstate my case here, I realize.)

Mary, I agree with Rob that your quadrant helps illustrate the assessment challenge. It reminds me a little of Bloom's Taxonomy, but the matrix allows for greater analytical flexibility, elevating shades of gray to a full color palette. Perhaps assessment should be keyed to a descriptive model of ideal learning environments; through structured observation, conversation and product analysis, individual cases could then be analyzed against a "best practice" standard.

By the same token, for learners, goal-based learning attributes could be described against which learners' achievement might be assessed. Some of this assessment can indeed be quantitative, even test-centered, but not all of it. The point is that success would be measured against things deemed worthy of achieving, and narrative valued as highly as numbers, if not more so.

But I dream — and ramble…. Back to the real world where I'll pick up my laundry, pondering the degree to which my shirts match my ideal model of cleanliness.

Hi John,

Many thanks for these reflections on how to develop the matrix for greater analytical flexibility – and I like the analogy of moving from grey murkiness into the light of full colour – as institutions and schools move towards fuller autonomy and understanding of using ICT for knowledge deepening and creation.

Developing the descriptive model with case studies from partner countries to provide practical ideas for planning, monitoring and evaluation approaches using the matrix is our next stage of the toolkit development.

Yes, keep on dreaming and rambling: this is exactly what is needed as we approach these challenges!

Best wishes,

Mary

JLebaron: Yes, YouTube means that you can embed a video in your comment. Intense Debate doesn't support other video services, but, as you found, it supports links, even HTML-coded links, so enjoy.

Mary,

When you talk about "the use of indicators within development models of ICT integration in education – to study the progressive phases through which teachers and students adopt and use ICT" it sounds to me like you're talking about classic Change Management – the systematic process of creating organizational change.

In that context, I think there should be broad agreement. We can assess the amount of change (ICT adoption, usage) that happens in an educational system, and, drawing parallels with other change processes, note that it is often much harder, and more ambiguous, to measure the impact of that change.