Technology and Child Development: Evidence from One Laptop per Child Program in Peru

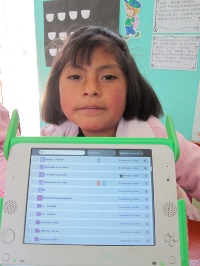

The One Laptop per Child (OLPC) program aims to improve learning in the poorest regions of the world through providing laptops to children for use at school and home. Since its start, the program has been implemented in 36 countries and more than two million laptops have been distributed.

The investments entailed are significant given that each laptop costs around $200, compared with $48 spent yearly per primary student in low-income countries and $555 in middle-income countries (Glewwe and Kremer, 2006). Nonetheless, there is little solid evidence regarding the effectiveness of this program.

Technology and Child Development: Evidence from the One Laptop per Child Program presents results from the first large-scale randomized evaluation of OLPC. The study sample includes 319 public schools in small, poor communities in rural Peru, the world’s leading country in terms of scale of implementation. Extensive data collected after about 15 months of implementation are used to test whether increased computer access affected human capital accumulation.

The main study outcomes include academic achievement in Math and Language, and cognitive skills as measured by Raven's Progressive Matrices, a verbal fluency test and a Coding test. The Raven's matrices measure non-verbal abstract reasoning, the verbal fluency test is intended to capture language functions, and the Coding test measures processing speed and working memory.

Exploring impacts on cognitive skills is motivated by the empirical evidence suggesting that computer use can increase performance in cognitive tests and the strong documented link between scores in these tests and important later outcomes such as school achievement and job performance (Maynard, Subrahmanyam and Greenfield, 2005; Malamud and Pop-Eleches, 2011; Neisser et al., 1996). Additionally, the software loaded on the laptops contains games and applications not directly aligned with Math and Language but that potentially could produce improvements in general cognitive skills.

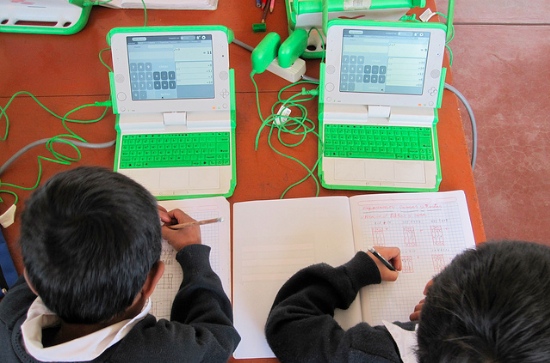

Our results indicate that the program dramatically increased access to computers. There were 1.18 computers per student in the treatment group, compared with 0.12 in control schools at follow-up. This massive rise in access explains substantial differences in use. Eighty-two percent of treatment students reported using a computer at school in the previous week compared with 26 percent in the control group. Effects on home computer use are also large: 42 percent of treatment students report using a computer at home in the previous week versus 4 percent in the control group.

The majority of treatment students showed general competence with the laptops in tasks such as operating core applications (for example, a word processor) and searching for information on the computer. Internet use was limited because hardly any schools in the study sample had access. Turning to educational outcomes, we find no evidence that the program increased learning in Math or Language. The estimated effect on the average Math and Language score is 0.003 standard deviations, and the associated standard error is 0.055.
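As a rough illustration of how precise this null result is, the reported estimate and standard error can be turned into an approximate 95 percent confidence interval. The sketch below assumes a simple normal approximation; it is not the paper's own inference procedure, which may account for clustering by school or other corrections.

```python
# Approximate 95% confidence interval for the estimated effect on the
# average Math and Language score. Assumption: normal approximation with
# the reported point estimate and standard error (not the paper's method).
from statistics import NormalDist

estimate = 0.003    # estimated effect, in standard deviations
std_error = 0.055   # reported standard error

z = NormalDist().inv_cdf(0.975)  # two-sided 95% critical value, about 1.96
lower = estimate - z * std_error
upper = estimate + z * std_error

print(f"95% CI: [{lower:.3f}, {upper:.3f}] SD")
```

Under this approximation the interval runs from about -0.105 to 0.111 standard deviations, so positive or negative effects much larger than roughly a tenth of a standard deviation look unlikely.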

To explore this result, we analyze several potential channels. First, the time allocated to activities directly related to school does not seem to have changed. The program did not affect attendance or time allocated to doing homework. Second, it has been suggested that the introduction of computers increases motivation, but our results suggest otherwise: we do not find impacts on school enrollment.

Third, there is no evidence the program influenced reading habits. This is perhaps surprising given that the program substantially affected the availability of books to students. The laptops came loaded with 200 books, and only 26 percent of students in the control group had more than five books in their homes.

Finally, the program did not seem to have affected the quality of instruction in class. Information from computer logs indicates that a substantial share of laptop use was directed to activities that might have little effect on educational outcomes (word processing, calculator, games, music and recording sound and video). A parallel qualitative evaluation of the program suggests that the introduction of computers produced, at best, modest changes in pedagogical practices (Villarán, 2010). This may be explained by the lack of software in the laptops directly linked to Math and Language and the absence of clear instructions to teachers about which activities to use for specific curricular goals.

On the positive side, the results indicate some benefits on cognitive skills. In the three measured dimensions, students in the treatment group surpass those in the control group by between 0.09 and 0.13 standard deviations, though the difference is statistically significant, at the 10 percent level, only for the Raven's Progressive Matrices test (p-value 0.055). Still, the effects are quantitatively large.

A back-of-the-envelope calculation suggests that the estimated impact on the verbal fluency measure represents the progression expected in six months for a child. The average sixth (second) grader in the control group obtains 15.9 (7.1) correct items on this test. Hence, assuming that the average child takes four years to progress from second to sixth grade, the annual average progression is about 2.2 items. The estimated impact is 1.1 items, so it represents half a year of normal progression.
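The arithmetic in this back-of-the-envelope calculation can be written out as a short sketch. The variable names are illustrative, and the four-year gap between second and sixth grade is the same simplifying assumption the paragraph makes.

```python
# Convert the verbal fluency effect into months of normal progression,
# following the back-of-the-envelope reasoning in the text.
grade6_mean = 15.9   # correct items, average sixth grader (control group)
grade2_mean = 7.1    # correct items, average second grader (control group)
years_between = 4    # assumed years to progress from second to sixth grade
impact_items = 1.1   # estimated treatment effect, in correct items

# Average yearly gain in correct items between second and sixth grade
annual_progress = (grade6_mean - grade2_mean) / years_between
# Express the treatment effect as a fraction of a year, then in months
months_equivalent = impact_items / annual_progress * 12

print(f"Annual progression: {annual_progress:.1f} items")
print(f"Impact equals about {months_equivalent:.0f} months of progression")
```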

Similarly, the estimated impact for the Coding and Raven tests accounts for roughly the expected progression during five and four months, respectively. We summarize the effects on cognitive skills by constructing a variable that averages the three tests. Results indicate an impact of 0.11 standard deviations in this measure, which corresponds to the progression expected in five months (p-value 0.068).

Our results relate to two non-experimental studies that have used difference-in-differences strategies to assess the effects of OLPC on academic outcomes, finding conflicting results. Sharma (2012) estimates the effect of a small NGO-conducted pilot benefiting students in three grades in 26 schools in Nepal, finding no statistically significant effects in Math and negative effects in Language. Ferrando et al. (2011) explore the effects on 27 schools that participated in the OLPC program in Uruguay and find positive, statistically significant effects on both Math and Language.

Our work also relates to a growing literature that uses credible identification strategies to assess the effects of computer use on human capital accumulation. A set of studies has analyzed the effects of public programs that increase computer access and related inputs in schools, typically finding no impacts on Math and Language (Angrist and Lavy, 2002; Leuven et al., 2007; Machin, McNally and Silva, 2007; Barrera-Osorio and Linden, 2009).

A second group of studies has explored the effects of providing access to specially designed academic software to students and has documented in some cases, though not all, positive impacts on Math and Language (Dynarski et al., 2007; Banerjee et al., 2007; Linden, 2008; Barrow, Markman and Rouse, 2009).

Recently, researchers have focused on the effects of home computer use, and the results have been mixed. Fairlie and London (2011) report positive effects on a summary of educational outcomes whereas Malamud and Pop-Eleches (2011) find negative effects on school grades but positive effects on the Raven’s Progressive Matrices test.

Technology and Child Development: Evidence from the One Laptop per Child Program contributes to the literature on technology in education in several ways. First, we explore the effects of a program that intensively introduced computers at both schools and homes. The intervention was performed at the community level, allowing the incorporation of general equilibrium effects that prior studies could not identify. General equilibrium effects may arise if effects for individual students change as the percentage of their peers that are beneficiaries increases.

Second, we analyze this increased access in an ideal setting composed of many isolated communities with low baseline access to technology. The communities’ isolation precludes potential spill-over effects across study units that could contaminate the design. The low levels of baseline technology diffusion allow the intervention to produce substantial changes in both access to and use of computers.

Third, we obtain clean evidence from a large-scale randomized controlled trial involving thousands of students in 319 schools. Fourth, we not only measure the effect on academic achievement but also analyze the impact on cognitive skills and exploit computer logs to elicit objective data regarding how computers were used. Finally, our findings on the effects of the OLPC program in Peru contribute to filling the existing empirical vacuum concerning one of the most important and well-known initiatives in this area.

The remainder of the paper is organized as follows. Section 2 provides an overview of the education sector in Peru, the OLPC program and its implementation in Peru. Section 3 describes the research design, econometric models and data and documents the high balance and compliance of the experiment. Section 4 presents the main results and Section 5 explores heterogeneous effects. Section 6 offers a discussion of the main findings, and Section 7 concludes.


Thank you for sharing this paper!

The report says that 13% of XO laptops in Peru malfunctioned at some point, and about half of them were successfully repaired. Yet Uruguay, which has a much more efficient XO laptop maintenance and repair system, still had 27% of its XOs under repair at any given time. This makes me think that either children in Peru are much more careful with their XO laptops, or they are using them significantly less than children in Uruguay.

There are many other possible explanations. Not every batch of computers that comes off a manufacturer's line is the same: different suppliers, different versions of components, design changes, etc. For example, a change made by the display manufacturer is suspected of causing failures in some machines in Uruguay; it was subsequently corrected. This resulted in a spike in broken displays that only appeared in Uruguay. I would take a closer look at the data before jumping to conclusions about usage.

Is this data publicly available somewhere? Otherwise it's hard to include it when drawing conclusions…

I believe that children in Uruguay mostly take their laptops home, right? According to the IDB report, the majority of children in Peru do not take their laptops home.

We were also a bit surprised by this number, as we expected a higher fraction of laptops to have encountered technical problems. However, we tried to elicit the same information from students, teachers and directors, and in general the aggregate estimates were quite similar. It may be the case that laptops are handled more carefully in rural areas than in urban areas. Having data from more deployments around the world could give a clearer picture.

If you are wondering what a Raven’s Progressive Matrices test is, take it here: http://www.raventest.net/

Regarding how to interpret the cognitive gains, there are a number of good books on the topic. One in particular presents the main concepts and empirical evidence for a general audience: "Intelligence: A Very Short Introduction" by Ian Deary (ISBN 0192893211). A great paper on this subject is "Intelligence: Knowns and unknowns". This article was the result of the joint work of a number of experts in the field in the mid-1990s. You can download it from: http://www.gifted.uconn.edu/siegle/research/Correlation/...

The gains in cognitive skills are impressive. With about 5 months of advancement in cognitive ability compared to the non-XO group, PLUS computer literacy, they are ready to move ahead. Think about a child who can now walk: they have a different view of the world and opportunities for even MORE learning. If I have a chance, I would like to replicate this with a small group of children, as this is emerging proof that technology can accelerate development if well used.

"We turn to the core question of the paper: did increased computer access affect academic and cognitive skills? Table 7 shows that there are no statistically significant effects on Math and Language"

I agree that the gains in general cognitive skill are something to look into further. Five months is noteworthy, and even more so considering cognitive development often follows an exponential growth curve. The fact that Math and Language achievement was not found to have been significantly affected by the program should not be used to dampen or disregard the effects found in general cognitive skills. The research cited in the report on this point further emphasizes the reasoning:

From p. 2: "…computer use can increase performance in cognitive tests and the strong documented link among scores in these tests and important later outcomes such as school achievement and job performance (Maynard, Subrahmanyam and Greenfield, 2005; Malamud and Pop-Eleches, 2011; Neisser et al., 1996)."

I don't want to suggest that math and language skills are not important, but honestly, which is going to be more useful to these children in the longer term: that they remember some facts (measured by the standardized test), or that they can think and communicate in a more sophisticated way?

The fact that an academic impact in math and language is not seen, while development of cognitive skills is, may suggest that we need to look at those standardized tests to see whether they really measure real learning of math and language.

Thank you for this report, and your incredibly important work! While the lack of impact that OLPC has had on educational outcomes is somewhat disheartening (although not altogether surprising), it's only through rigorous evaluation of ICT4E projects that we will be able to drive home the need to stop repeating past mistakes. Incorporating these lessons learned into future projects can only make them better. It's great to see researchers taking up this challenge!

I must say that while it is interesting to read the paper and watch people come up with various conflicting observations, the analysis itself has nearly all the challenges of looking into the future through the familiar grid of the past.

I have seen the progress made by a few hundred students in various schools in India where the program has been taken up voluntarily, and the results are far more impressive than Peru's. Among about 1,000 children, fewer than 10 percent have had problems with laptop maintenance. The children have learnt to read, write, do numbers, play with small programmes, draw, paint, speak, etc. better than anyone had imagined possible since India became free.

Just about anyone who has been to these schools, including government officers, businessmen, corporate executives, teachers, NGOs, journalists and anyone else who cared to visit, has come away with an almost "spiritual" experience from watching children learn like never before despite all the infrastructure challenges.

My take is that those who want to see the impact of any new initiative that goes beyond the vision of people must understand what they are measuring first. Using a model built on measuring the efficiency of horse carriages to understand the meaning of cars is unlikely to be any help in moving to the automobile revolution. Of course we may find many vocal enemies of the automobile a hundred years later. But is that where we are headed with OLPC?

Wayan has done more damage to the diffusion of OLPC than he would like to concede or believe. But he is clearly using models from the horse-carriage era to appreciate the meaning of automobiles.

If I truly did damage to the diffusion of XO laptops in the manner conceived by Negroponte, then I will consider my efforts successful beyond my wildest dreams. You seem to think I am anti-technology or anti-XO laptop. I am neither. I am against implementation miracles, where helicopters are substituted for the real effort involved in changing education, regardless of technology.

You are so right, it's people trying to reinforce what they believe and pretend to be objective who really place barriers to innovation. Let's hope they don't stop it.

I agree with you. I think it is important to highlight and be explicit about the standards of those who evaluate, if they bring their own criteria.

In my experience, positive impact at small scale is easier to achieve (you can guarantee it), but it is a completely different challenge when you face a large-scale program. Like many of you, I am still interested in learning about the conditions that lead to great/positive change… at this large scale.

A computer is a tool and not a panacea. Giving everyone a hammer doesn't automatically make them capable of building a house, or at least not a good one. But people with tools are generally better off than people without. A computer for every child cannot and should not replace educational policy, systems and teachers. It does not absolve politicians of the burden of decisions regarding education.

I confess I did not read the report, as it's not my field and probably over my head, but did the Ministry of Education in Peru move the curriculum textbooks to the computer, hopefully in an interactive format? There should be some savings over time there, at least to offset the cost of the OLPC program.

Seventy weeks ago, Carlos VR published a response in the post titled OLPC in Peru: A Problematic One Laptop Per Child Program by Christoph Derndorfer. Carlos VR indicated that:

"With regard to empirical evidence, except for the study by Banerjee et al. [1], which showed some short-term effects of ICT on academic performance, most of the evidence of a positive impact of ICT on academic performance is based on correlational studies, not strong research designs.

On the contrary, the evidence that shows limited or no effects of ICT on academic performance is based on randomized experimental designs and multilevel modeling (strong and powerful research designs). Below you can find more papers providing evidence that the pro-ICT discourse is more myth than reality:

Angrist, J. & Lavy, V. (2002). New evidence on classroom computers and pupil learning. The Economic Journal, 112 (October), 735-765.

Barrera-Osorio, F. & Linden, L. L. (2009). The use and misuse of computers in education: Evidence from a randomized experiment in Colombia. Policy Research Working Paper Series 4836, The World Bank.

Fuchs, T. & Wößmann, L. (2004). Computers and student learning: bivariate and multivariate evidence on the availability and use of computers at home and at school. Brussels Economic Review, 47 (3-4), 359-385.

Linnakylä, P., Malin, A. & Taube, K. (2004). Factors behind low reading literacy achievement. Scandinavian Journal of Educational Research, 48 (3), 231-249.

Rouse, C. & Krueger, A. (2004). Putting computerized instruction to the test: A randomized evaluation of a "scientifically-based" reading program. Economics of Education Review, 23 (August), 323-338.

[1] Banerjee, A., Cole, S., Duflo, E., Linden, L. (2007). Remedying education: evidence from two randomized experiments in India. Quarterly Journal of Economics, 122 (3), 1235-1264. "

Based on the documents cited by Carlos VR, it was possible to anticipate that the results in Peru would be similar to those found in other experiences around the world; in other words, a randomized experiment was arguably not needed to reach that conclusion.

Recall that in Peru, the Census Student Evaluations (ECE, in Spanish), conducted every year by the Unit for the Measurement of Educational Quality (UMC, in Spanish) to measure reading comprehension and logical-mathematical thinking in second and fourth grade, are not yet linked to the use of information technology. This is despite the fact that these assessments began in 2007, the same year DIGETE was created and the One Laptop per Child Program was approved, which incidentally was a bad idea for our country.

However, following the path laid out by Claudia Urrea in her doctoral thesis, a longitudinal research strategy using mixed methods would make it possible to measure the impact of IT on Math and Language fairly, even across courses. The objective of this research would be to restructure the Peruvian public school so that it is better coordinated and adapted to national conditions.

Urrea, C. (2007, September 14). One to one connections: Building a Learning Community Culture. Massachusetts Institute of Technology, MIT, Cambridge, Massachusetts. Retrieved from http://dspace.mit.edu/bitstream/handle/1721.1/417…

This is the path that has already been outlined by the United States Department of Education:

"Redesigning education in America for improved productivity is a complex challenge that will require all 50 states, thousands of districts and schools across the country, the federal government, and other education stakeholders in the public and private sectors coming together to design and implement solutions. It is a challenge for educators (leaders, teachers, and policymakers committed to learning) as well as technologists, and ideally education leaders and technology experts will come together to lead the effort." (p. 64). Office of Educational Technology, U.S. Department of Education (2010). Transforming American Education: Learning Powered by Technology (Draft, Vol. 1). Retrieved from http://www.ed.gov/sites/default/files/NETP-2010-f…

Finally, in Peru we will review the results of this report to learn what not to do when we try to introduce information technology into the national education system.

Peruvian officials say a fire in the government's main educational materials warehouse has destroyed half a million books, 61,000 laptop computers and 6,000 solar panels. The material was destined for rural schools and the blaze struck just as Peru's school year begins. Education Minister Patricia Salas puts the damage at $103 million. The computers destroyed overnight Friday were $200 XO models purchased from the U.S.-based One Laptop Per Child Association, which seeks to provide the world's most isolated and poorest children with rugged, low-cost computers. It took firefighters 11 hours to control the blaze, which is under investigation: http://abcnews.go.com/Technology/wireStory/fire-d…

One thing we need to keep in mind in this discussion is which input we are measuring. In reality, it is the instructional design that we are measuring through the learning outcomes of the students, and technological resources are only one part of instructional design. If the OLPC computers do not have carefully designed instruction that meets the needs of the learners, then should we be surprised that reading and math skills were not improved? Asking this would be like wondering why an experiment comparing feeding children junk food from a plate with feeding them junk food from the floor did not lead to differences in obesity measures. It may affect food-borne illness measures (assuming the plates are clean and the floor is not). But that is all we can expect.

Instead, we need to design instruction that takes advantage of the capabilities of the technology. The report said students were using the laptops less for educational purposes than for other purposes (videos, music, word processing, games). This should be seen as an opportunity, not a failure of the medium. All of those capabilities of the technology can be used to turn traditional methods, very quickly and easily, into learning activities that are individualized, interactive, collaborative, project-based, constructionist (not a misspelling of constructivism, but Papert's idea that students can learn more deeply through constructing things, such as making videos), student-centered, and so on. In most cases this should not take four times as much time, as has been mentioned, but only a little extra lesson-planning time.

For instance, for a literacy lesson, teachers could ask students to go around their community with their laptops taking pictures of objects that start with a certain letter. They then audio record themselves saying the names of the objects. They then import these sounds and pictures into a simple word processing page with the picture, sound and written word for each object, creating their own multimedia alphabet books. Students will understand much more deeply the sounds of those words, and how they link to the letter, when they are creating these projects rather than simply memorizing letters and reciting them in class. The learning that takes place here has more to do with how the instruction was designed (addressing learners' needs, linking to specific learning outcomes, integrating well with technology, providing learners opportunities to practice new skills, and involving adequate assessment and feedback) than simply with what technology is used.

Agreed. Teachers could do many things, and many things better, by utilizing the XO laptops. But what is missing is the support (training, permission to experiment, even to take laptops home) that could make that teaching possible. From what I heard and read about the Peru program, teachers were often considered a hindrance to be gotten around rather than a primary stakeholder to be nurtured and leveraged for greater success, as in Uruguay. Though it's true that teachers only got support in Uruguay after much push-back by teachers and their unions on the issue of teacher inclusion in the intervention.

We carefully designed a 40-hour training program for teachers to use the XOs in a pedagogically sensible way, and 8 hours were devoted to maintenance of the units. That should have been enough for reasonably well-trained teachers, which, sadly, we did not have. I understand the new administration is trying to focus its attention on teacher training and support. I hope the best for their efforts.

"If the OLPC computers do not have carefully designed instruction that meets the needs of the learners, then should we be surprised that reading and math skills were not improved?" The computers do not come with instruction because they are designed for learning. I like your example of children using their computers to take pictures and gather information in their communities… that is exactly the kind of software available on the XO (OLPC computer).

OLPC values open-ended software that allows students and teachers to create their own experiments, data visualizations and simulations. I invite you to look at these examples: http://wiki.sugarlabs.org/go/Math4Team/Florida

I agree with you, it takes not only training, but a lot of support and development for people to get there, but it is happening. There are teachers as well as students creating work beyond those examples.

Over at the World Bank Development Impact Blog, Berk Ozler has a long, interesting post on OLPC evaluations:

"What I want to talk about is not so much the evidence, but the fact that the whole thing looks a mess: both from the viewpoint of the implementers (countries who paid for these laptops) and from that of the OLPC."

Yes, he is a little late to the debate, but he makes good points. The first one is the basic question I've been asking about OLPC for 6 years now:

"Let's go back and think for another second about whether it would be reasonable to expect improvements in mastery of curricular material if we just give each student a laptop in developing countries."

Overall, it's worth the read, and the comments below the article are telling. There are still those in ICT4E who don't seem to have learned the OLPC lesson: you cannot just drop off a techno-gadget and expect long-term change without a long-term change strategy and intervention.

I have posted a response on the same blog.

There were some of us that got this before the whole OLPC thing became so 'popular'. Curriculum is king, but at the very bottom of it is a largely undefined idea of what education is supposed to accomplish and how it should be measured.

Ideal conditions for any education evaluation are extremely difficult to come by. The IDB response to Berk's post is worth reading: http://blogs.iadb.org/desarrolloefectivo_en/2012/…